Documentary: Dynex, Neuromorphic Quantum Computing for solving Real-World Problems, At Scale.

The world’s first documentary on Dynex quantum computing was showcased at the Guanajuato International Film Festival, presented by IDEA GTO in León, Mexico, on July 20, 2024.

Dynex Reference Guide

Neuromorphic Computing for Computer Scientists: A complete guide to Neuromorphic Computing on the Dynex Neuromorphic Cloud Computing Platform, Dynex Developers, 2024, 249 pages, available as eBook, paperback and hardcover.

> Amazon.com

> Amazon.co.uk

> Amazon.de

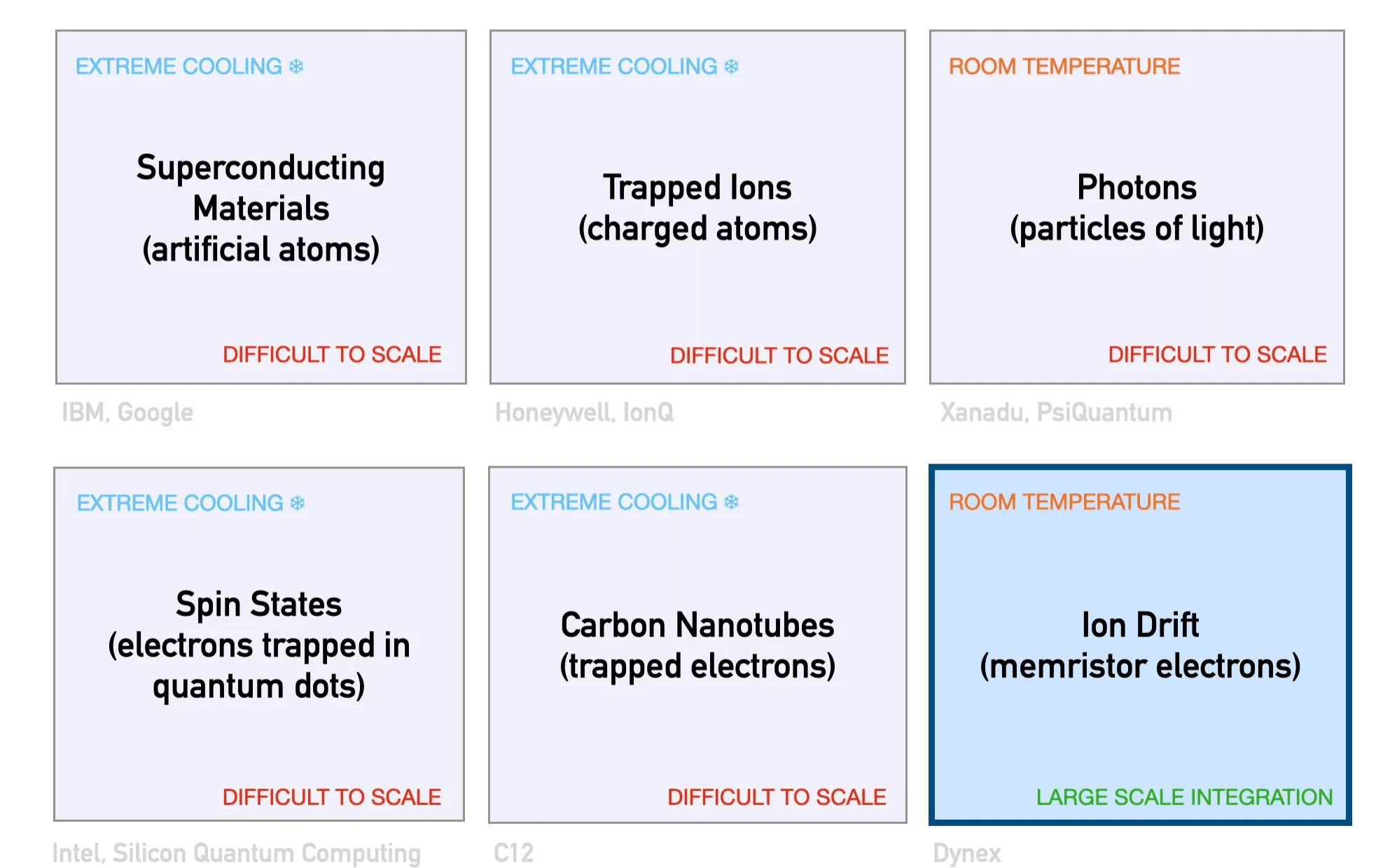

Neuromorphic quantum computing in simple terms

Dynex’s patent-pending neuromorphic quantum computing is a type of quantum computing that uses ion drifting of electrons. Unlike superconducting-based quantum computing, it relies on memristive elements that react quickly to changes, which helps the system find the best solutions swiftly.

This technology is exciting because it promises computer systems that are faster than traditional computers and handle complex tasks more efficiently.
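
To give a feel for what a memristive element is, here is a minimal Python sketch of the generic textbook "linear ion drift" memristor model. It is not Dynex's implementation and says nothing about how the Dynex platform works internally. The function name `simulate_memristor` and all parameter values are illustrative assumptions. The idea it shows: the element's resistance depends on the current that has already flowed through it, so it "remembers" its history and changes as the input changes.

```python
import math


def simulate_memristor(amplitude=1.0, frequency=1.0, steps=10000, cycles=1.0,
                       r_on=100.0, r_off=16000.0, d=10e-9, mu_v=1e-14, x0=0.1):
    """Euler simulation of a linear ion drift memristor (textbook model).

    All names and values are illustrative assumptions, not Dynex parameters.
    x is the normalized width (0..1) of the low-resistance region.
    Returns a list of (time, voltage, current, resistance) tuples.
    """
    dt = cycles / frequency / steps
    x = x0
    trace = []
    for k in range(steps):
        t = k * dt
        # Sinusoidal drive voltage applied across the element.
        v = amplitude * math.sin(2.0 * math.pi * frequency * t)
        # Resistance is a mix of the "on" and "off" values, weighted by x.
        resistance = r_on * x + r_off * (1.0 - x)
        i = v / resistance
        trace.append((t, v, i, resistance))
        # Linear ion drift: the state moves in proportion to the current.
        x += mu_v * r_on / d ** 2 * i * dt
        # Keep the state inside its physical bounds.
        x = min(max(x, 0.0), 1.0)
    return trace


if __name__ == "__main__":
    trace = simulate_memristor()
    resistances = [r for _, _, _, r in trace]
    print("initial resistance (ohm):", round(resistances[0], 1))
    print("lowest resistance (ohm): ", round(min(resistances), 1))
```

In this sketch the positive half of the voltage cycle lowers the resistance and the negative half raises it again, which is the history-dependent behavior that gives memristive elements their name.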